Chapter 7 Validation

7.1 Manual Counts Comparison

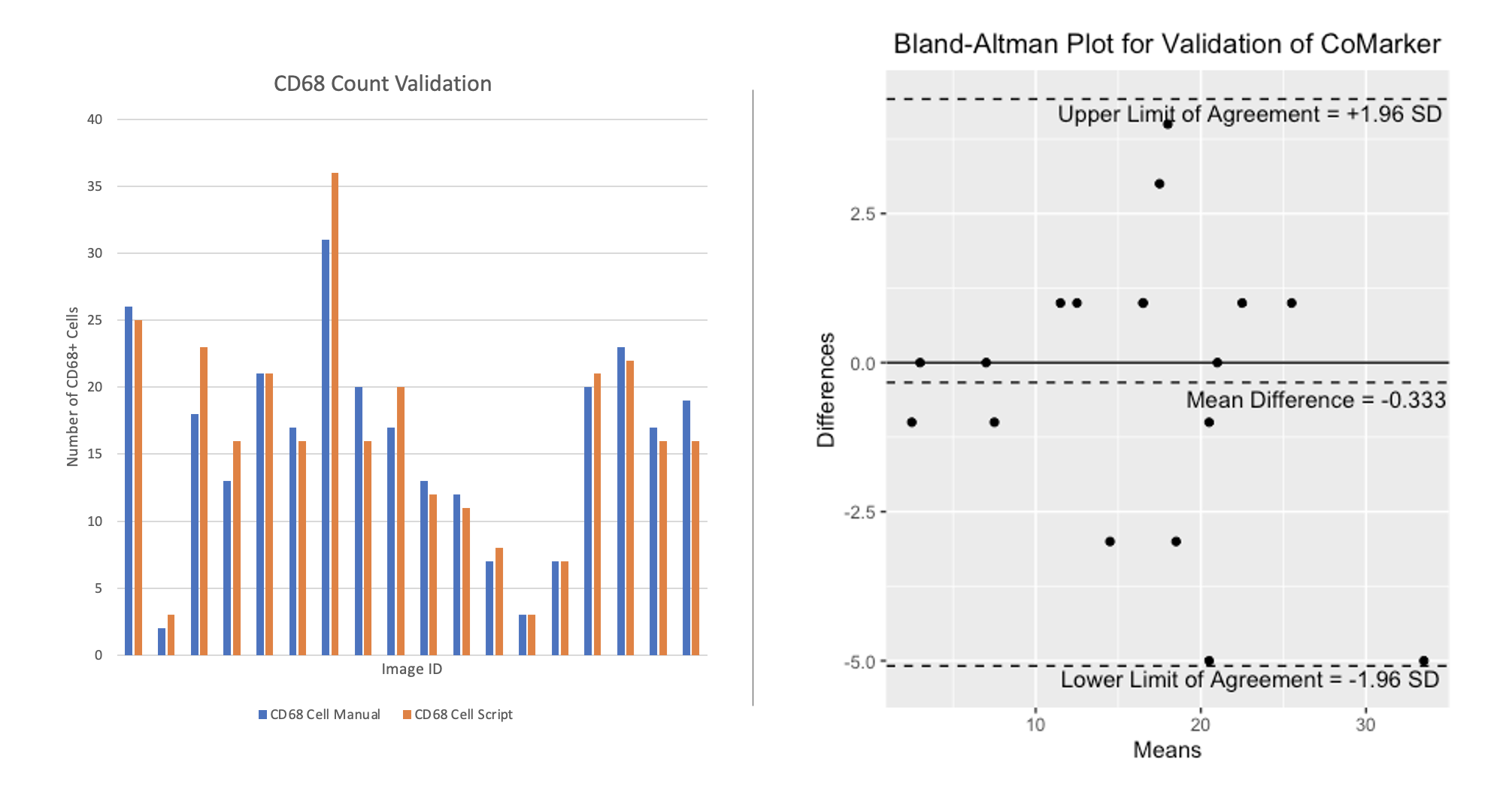

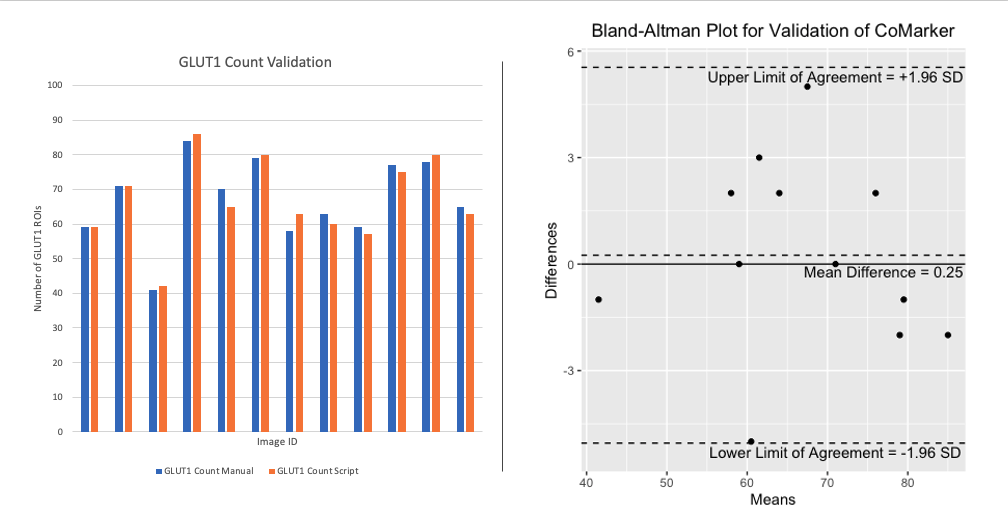

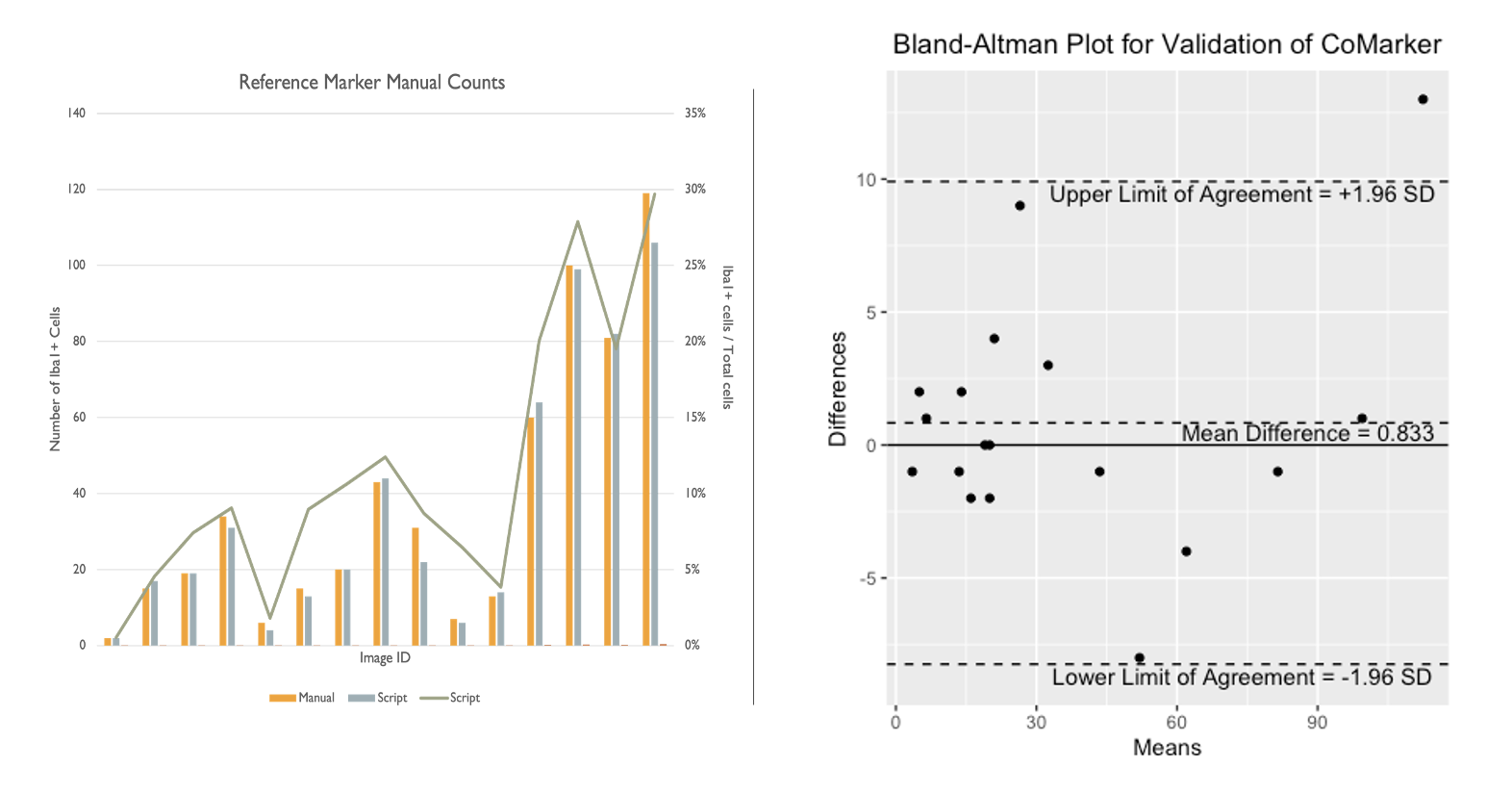

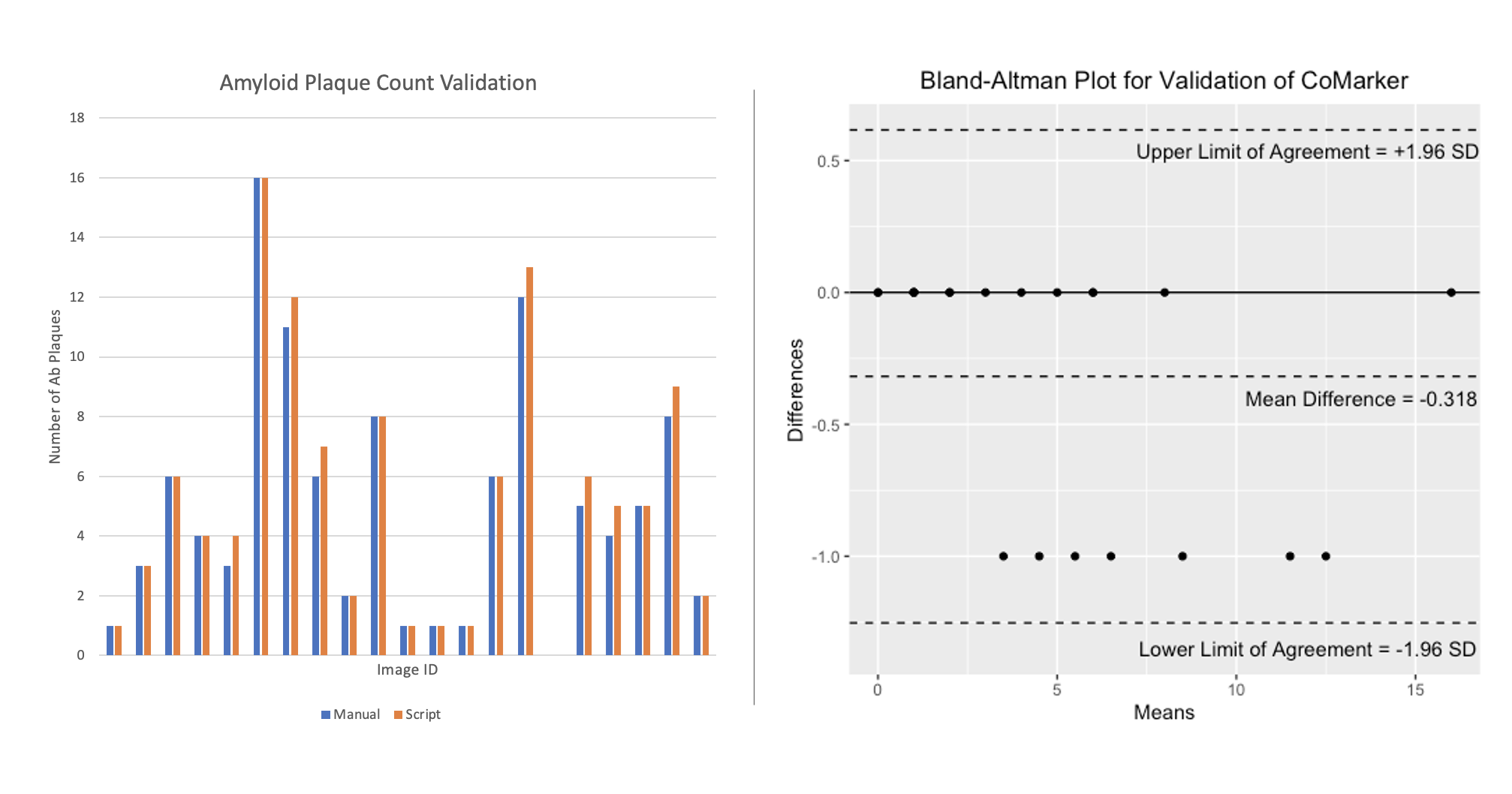

Here we present a comparison between the script and blind manual counts. The aim of the code is to imitate manual counts while speeding up the time taken to analyse collections of images. Counts were obtained by both internal and external researchers, with the method of analysis being to count signal within 2 microns of DNA (except for ROI counts). Images IDs have been removed from the plot for privacy purposes. The results from these comparisons show that the script counts marker signal/positive cells accurately, and we aim to add more manual count data to the comparison in the near future.

The comparisons below compare counts produced by CoMarker and manual counts for the same images. The bar charts on the left display the paired counts, while the Bland-Altman plots on the right show the difference between the count values for each image with respect to a 95% confidence interval.

7.1.5 Intraclass Correlation

Intraclass correlations were calculated between counts of Ab, Iba1 and CD68 markers made by an internal human counter, an external human counter, and CoMarker.

7.1.5.1 Single Score Intraclass Correlation

Model: twoway; Type: agreement; Raters = 3; n = 35

ICC(A,1) = 0.993; p = 1.7e-67

95%-Confidence Interval for ICC Population Values: 0.987 < ICC < 0.996

We can conclude that there is an excellent level of within-class correlation, both between each human counter, and between each counter and CoMarker.